Wget Download All Files

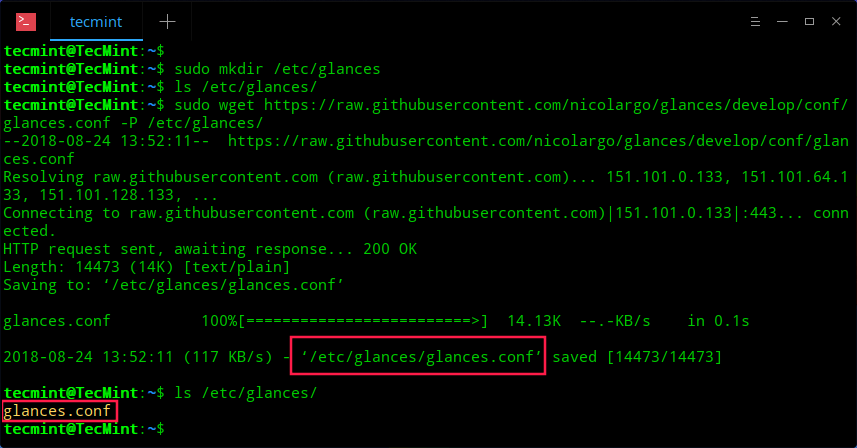

How do I download an entire website for offline viewing? How do I save all the MP3s from a website to a folder on my computer? How do I download files that are behind a login page? How do I build a mini-version of Google?

The wget utility is one of the most versatile tools for downloading files from the internet. It can handle almost any download situation, including large files, recursive downloads, non-interactive downloads and downloading many files at once. In this article, let us review how to use wget for various download scenarios. The wget command downloads files over the HTTP, HTTPS and FTP protocols. It can download files in the background, crawl websites and resume interrupted downloads, and it offers a number of options for downloading over very poor network connections.

Wget is a free utility, available for Mac, Windows and Linux (where it usually comes preinstalled), that can help you accomplish all this and more. What makes it different from most download managers is that wget can follow the HTML links on a web page and recursively download the files. It is the same tool that a soldier used to download thousands of secret documents from the US Army’s intranet that were later published on the WikiLeaks website.

Spider Websites with Wget – 20 Practical Examples

Use wget to Recursively Download all Files of a Type, like jpg, mp3, pdf or others

Written by Guillermo Garron. Date: 2012-04-29 13:49:00 00:00.

If you need to download all files of a specific type from a site, you can use wget to do it.

Wget is extremely powerful, but as with most other command line programs, the plethora of options it supports can be intimidating to new users. What we have here is a collection of wget commands that you can use to accomplish common tasks, from downloading single files to mirroring entire websites. It will help if you read through the wget manual, but for the busy souls, these commands are ready to execute.

1. Download a single file from the Internet

wget http://example.com/file.iso

2. Download a file but save it locally under a different name

wget --output-document=filename.html example.com

3. Download a file and save it in a specific folder

wget --directory-prefix=folder/subfolder example.com

4. Resume an interrupted download previously started by wget itself

wget --continue example.com/big.file.iso

5. Download a file but only if the version on server is newer than your local copy

wget --continue --timestamping wordpress.org/latest.zip

6. Download multiple URLs with wget. Put the URLs in a text file, one per line, and pass the file to wget.

wget --input-file=list-of-file-urls.txt
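A minimal sketch of the list workflow, assuming a POSIX shell: it writes placeholder URLs to list-of-file-urls.txt with a heredoc and wraps the download in a hypothetical download_list function. The WGET variable is an assumption of this sketch, not a wget feature; setting WGET=echo prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: build a URL list and hand it to wget --input-file.
# The URLs are placeholders; WGET is a hypothetical override (WGET=echo previews).
: "${WGET:=wget}"

# One URL per line, as --input-file expects.
cat > list-of-file-urls.txt <<'EOF'
http://example.com/file1.zip
http://example.com/file2.zip
http://example.com/file3.zip
EOF

# Download every URL in the list; --continue resumes partial files on a re-run.
download_list() {
  "$WGET" --continue --input-file="$1"
}

# Usage: download_list list-of-file-urls.txt
```

Because of --continue, re-running the same command after an interruption picks up partially downloaded files instead of starting them over.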

7. Download a list of sequentially numbered files from a server

wget http://example.com/images/{1..20}.jpg
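The {1..20} range is expanded by the shell (bash or zsh), not by wget; a plain POSIX sh such as dash passes the braces through literally. The sketch below, built around a hypothetical image_urls function, generates the same URLs with seq and pipes them to wget, which reads URLs from standard input when --input-file is given as -.

```shell
#!/bin/sh
# Sketch: generate numbered image URLs without relying on brace expansion.
# image_urls is hypothetical; the base URL is the placeholder from the example.
# seq ships with Linux and macOS, although it is not part of POSIX.
image_urls() {
  # printf reuses its format string once for every number seq prints.
  printf 'http://example.com/images/%s.jpg\n' $(seq 1 "$1")
}

# Usage (this one downloads): image_urls 20 | wget --input-file=-
```

For zero-padded names such as 01.jpg through 20.jpg, replace seq 1 "$1" with seq -w 1 "$1".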

8. Download a web page with all assets – like stylesheets and inline images – that are required to properly display the web page offline.

wget --page-requisites --span-hosts --convert-links --adjust-extension http://example.com/dir/file

Mirror websites with Wget

9. Download an entire website including all the linked pages and files

wget --execute robots=off --recursive --no-parent --continue --no-clobber http://example.com/

10. Download all the MP3 files from a sub directory

wget --level=1 --recursive --no-parent --accept mp3,MP3 http://example.com/mp3/

11. Download all images from a website into a single folder

wget --directory-prefix=files/pictures --no-directories --recursive --no-clobber --accept jpg,gif,png,jpeg http://example.com/images/

12. Download the PDF documents from a website through recursion but stay within specific domains.

wget --mirror --span-hosts --domains=abc.com,files.abc.com,docs.abc.com --accept=pdf http://abc.com/

13. Download all files from a website but exclude a few directories.

wget --recursive --no-clobber --no-parent --exclude-directories /forums,/support http://example.com

Wget for Downloading Restricted Content

Wget can be used for downloading content from sites that are behind a login screen or ones that check for the HTTP referer and the User Agent strings of the bot to prevent screen scraping.

14. Download files from websites that check the User Agent and the HTTP Referer

wget --referer=http://google.com --user-agent="Mozilla/5.0 Firefox/4.0.1" http://nytimes.com

15. Download files from password-protected sites

wget --http-user=labnol --http-password=hello123 http://example.com/secret/file.zip

16. Fetch pages that are behind a login page. Replace user and password with the actual names of the form fields, and point the URL to the form’s submit (action) page.

wget --cookies=on --save-cookies cookies.txt --keep-session-cookies --post-data 'user=labnol&password=123' http://example.com/login.php

wget --cookies=on --load-cookies cookies.txt --keep-session-cookies http://example.com/paywall
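The two steps above can be wrapped in one function. This is a sketch: fetch_behind_login and the WGET override (WGET=echo previews the commands) are hypothetical, and the form field names must still match the real login form. Cookies are enabled by default in wget, so --cookies=on is left out. Passing the password with --post-data exposes it in the process list and shell history; wget’s --post-file option reads the same data from a file instead.

```shell
#!/bin/sh
# Sketch of the two-step login flow; all names here are hypothetical.
: "${WGET:=wget}"

# $1: login form action URL, $2: protected page, $3: url-encoded form data
fetch_behind_login() {
  login_url=$1
  page_url=$2
  form_data=$3
  # Step 1: submit the form, keep session cookies, discard the response page.
  "$WGET" --save-cookies cookies.txt --keep-session-cookies \
    --post-data "$form_data" --output-document=/dev/null "$login_url" || return 1
  # Step 2: reuse the saved cookies to fetch the protected page.
  "$WGET" --load-cookies cookies.txt "$page_url"
}

# Usage:
#   fetch_behind_login http://example.com/login.php http://example.com/paywall 'user=labnol&password=123'
```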

Retrieve File Details with wget

17. Find the size of a file without downloading it (look for the Content-Length header in the response; the size is in bytes)

wget --spider --server-response http://example.com/file.iso
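wget prints the server response on standard error, so extracting the size takes a 2>&1 redirect and a small filter. A sketch with two hypothetical helpers: content_length picks the Content-Length value (the last one, if redirects produced several responses), and human_size converts bytes to binary units. Servers that omit Content-Length leave content_length with nothing to print.

```shell
#!/bin/sh
# Sketch: read wget --server-response output on stdin, print Content-Length.
content_length() {
  awk 'tolower($1) == "content-length:" { len = $2 }
       END { if (len != "") print len }' | tr -d '\r'
}

# Format a byte count with binary units (1 KiB = 1024 bytes, and so on).
human_size() {
  awk -v b="$1" 'BEGIN {
    split("B KiB MiB GiB TiB", unit)
    i = 1
    while (b >= 1024 && i < 5) { b /= 1024; i++ }
    printf "%.1f %s\n", b, unit[i]
  }'
}

# Usage (this one contacts the server):
#   size=$(wget --spider --server-response http://example.com/file.iso 2>&1 | content_length)
#   human_size "$size"
```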

18. Download a file and display the content on screen without saving it locally.

wget --output-document - --quiet google.com/humans.txt

19. Find the last modified date of a web page (check the Last-Modified header in the HTTP response).

wget --server-response --spider http://www.labnol.org/
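The same response can be filtered for any single header. A sketch of a hypothetical header_value function that matches the header name case-insensitively and prints the value from the last response in the output:

```shell
#!/bin/sh
# Sketch: print one header's value from wget --server-response output on stdin.
# header_value is hypothetical; with redirects, the last matching header wins.
header_value() {
  awk -v name="$1" '
    {
      key = tolower($1)
      sub(/:$/, "", key)
    }
    key == tolower(name) {
      $1 = ""            # drop the "Name:" field
      sub(/^ +/, "")     # and the space left in front of the value
      val = $0
    }
    END { if (val != "") print val }' | tr -d '\r'
}

# Usage (this one contacts the server):
#   wget --server-response --spider http://www.labnol.org/ 2>&1 | header_value Last-Modified
```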

20. Check the links on your website to ensure that they are working. The spider option will not save the pages locally.

wget --output-file=logfile.txt --recursive --spider http://example.com
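With --output-file, the results of the link check end up in logfile.txt. A sketch for pulling out the URLs that returned 404, assuming wget’s default log layout, where each request starts with a line such as --2024-01-01 12:00:00--  followed by the URL, and the status line reads HTTP request sent, awaiting response... 404 Not Found. The broken_links function is hypothetical, and the log format may differ between wget versions or when --server-response is added.

```shell
#!/bin/sh
# Sketch: list URLs that answered 404 in a wget --spider log.
# Assumes "--YYYY-MM-DD HH:MM:SS--  URL" lines start each request and the
# status appears as "HTTP request sent, awaiting response... 404 Not Found".
broken_links() {
  awk '
    /^--[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] / { url = $NF }
    /awaiting response\.\.\. 404/ { print url }' "$1" | sort -u
}

# Usage: broken_links logfile.txt
```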

Wget – How to be nice to the server?

The wget tool is essentially a spider that scrapes / leeches web pages but some web hosts may block these spiders with the robots.txt files. Also, wget will not follow links on web pages that use the rel=nofollow attribute.

You can, however, force wget to ignore the robots.txt and the nofollow directives by adding the switch --execute robots=off to all your wget commands. If a web host is blocking wget requests by looking at the User Agent string, you can always fake that with the --user-agent=Mozilla switch.

The wget command will put additional strain on the site’s server because it will continuously traverse the links and download files. A good scraper would therefore limit the retrieval rate and also include a wait period between consecutive fetch requests to reduce the server load.

wget --limit-rate=20k --wait=60 --random-wait --mirror example.com

In the above example, we have limited the download bandwidth to 20 KB/s, and wget will wait a random interval between 30 and 90 seconds (0.5 to 1.5 times the --wait value) before retrieving the next resource.

Finally, a little quiz. What do you think this wget command will do?

wget --span-hosts --level=inf --recursive dmoz.org

Newer isn’t always better, and the wget command is proof. First released back in 1996, this application is still one of the best download managers on the planet. Whether you want to download a single file, an entire folder, or even mirror an entire website, wget lets you do it with just a few keystrokes.

Of course, there’s a reason not everyone uses wget: it’s a command line application, and as such takes a bit of time for beginners to learn. Here are the basics, so you can get started.

How to Install wget

Before you can use wget, you need to install it. How to do so varies depending on your computer:

- Most (if not all) Linux distros come with wget by default. So Linux users don’t have to do anything!

- macOS systems do not come with wget, but you can install command line tools using Homebrew. Once you’ve set up Homebrew, just run the following in the Terminal:

brew install wget

- Windows users don’t have easy access to wget in the traditional Command Prompt, though Cygwin provides wget and other GNU utilities, and the Ubuntu Bash shell in Windows 10 (Windows Subsystem for Linux) also comes with wget.

Once you’ve installed wget, you can start using it immediately from the command line. Let’s download some files!

Download a Single File

Let’s start with something simple. In your browser, copy the URL of a file you’d like to download.

Now head back to the Terminal and type wget followed by the pasted URL. The file will download, and you’ll see its progress in real time.

Note that the file will download to your Terminal’s current folder, so you’ll want to cd to a different folder if you want it stored elsewhere. If you’re not sure what that means, check out our guide to managing files from the command line. The article mentions Linux, but the concepts are the same on macOS systems, and Windows systems running Bash.

Continue an Incomplete Download

If, for whatever reason, you stopped a download before it could finish, don’t worry: wget can pick up right where it left off. Just use this command:

wget -c http://example.com/file.iso

The key here is -c, which is an “option” in command line parlance. This particular option tells wget that you’d like to continue an existing download.
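wget already retries transient network errors within a single run (20 tries by default), so a loop like the sketch below only matters when the wget process itself exits before the file is complete. resume_until_done, WGET and RETRY_DELAY are hypothetical names for this sketch.

```shell
#!/bin/sh
# Sketch: re-run wget -c until the download succeeds or max_tries is reached.
: "${WGET:=wget}"
: "${RETRY_DELAY:=10}"   # seconds to pause between attempts

resume_until_done() {
  url=$1
  max_tries=${2:-5}
  attempt=1
  # -c makes every attempt continue from the bytes already on disk.
  until "$WGET" -c "$url"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$RETRY_DELAY"
  done
}

# Usage: resume_until_done http://example.com/file.iso 10
```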

Mirror an Entire Website

If you want to download an entire website, wget can do the job.

wget -m http://example.com

By default, this will download everything on the site example.com, but you’re probably going to want to use a few more options for a usable mirror.

- The --convert-links option changes links inside each downloaded page so that they point to each other, not the web.

- The --page-requisites option downloads things like style sheets, so pages will look correct offline.

- The --no-parent option stops wget from ascending into parent directories. So if you want to download http://example.com/subexample, you won’t end up with the parent page.

Combine these options to taste, and you’ll end up with a copy of any website that you can browse on your computer.

Note that mirroring an entire website on the modern Internet is going to take up a massive amount of space, so limit this to small sites unless you have near-unlimited storage.
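Combining the options above, a mirror command might look like the sketch below. mirror_site and the WGET override (WGET=echo previews the command) are hypothetical; add or drop options to taste.

```shell
#!/bin/sh
# Sketch: mirror a site for offline browsing with the options described above.
: "${WGET:=wget}"

mirror_site() {
  "$WGET" --mirror --convert-links --page-requisites --no-parent "$1"
}

# Usage: mirror_site http://example.com/subexample/
```

The trailing slash in the usage line matters: for HTTP, wget only treats subexample/ as a directory for --no-parent purposes when the URL ends in a slash.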

Download an Entire Directory

If you’re browsing an FTP server and find an entire folder you’d like to download, just run:

wget -r ftp://example.com/folder

The -r option tells wget you want a recursive download. You can also include --no-parent if you want to avoid downloading folders and files above the current level.
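By default, a recursive download recreates the server’s layout locally, so files from ftp://example.com/folder end up under example.com/folder/. A sketch that flattens this with wget’s --no-host-directories and --cut-dirs options; fetch_ftp_folder, its second argument (how many leading directories to drop) and the WGET override are hypothetical.

```shell
#!/bin/sh
# Sketch: recursive FTP folder download without the host/path directories.
: "${WGET:=wget}"

# $1: folder URL; $2: number of leading remote directories to drop (default 1)
fetch_ftp_folder() {
  # --no-host-directories: don't create an example.com/ directory.
  # --cut-dirs: strip that many leading path components (e.g. "folder/").
  "$WGET" --recursive --no-parent --no-host-directories \
    --cut-dirs="${2:-1}" "$1"
}

# Usage: fetch_ftp_folder ftp://example.com/folder/
```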

Download a List of Files at Once

If you can’t find an entire folder of the downloads you want, wget can still help. Just put all of the download URLs into a single text file, one per line, then point wget to that document with the -i option, like this:

wget -i download.txt

Do this and your computer will download all files listed in the text document, which is handy if you want to leave a bunch of downloads running overnight.

A Few More Tricks

We could go on: wget offers a lot of options. But this tutorial is just intended to give you a launching off point. To learn more about what wget can do, type man wget in the terminal and read what comes up. You’ll learn a lot.

Having said that, here are a few other options I think are neat:

- If you want your download to run in the background, just include the option -b.

- If you want wget to keep retrying after transient errors such as a dropped connection, use the option -t 10. That will try to download 10 times; you can use whatever number you like. Note that wget does not retry fatal errors such as 404 Not Found.

- If you want to manage your bandwidth, the option --limit-rate=200k will cap your download speed at 200KB/s. Change the number to change the rate.
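Putting these tricks together with the -i option, an unattended overnight download could look like the sketch below, using the long forms of the same options. overnight_download, download.log and the WGET override are hypothetical names. In the background, wget writes its progress to wget-log unless --output-file names another log file.

```shell
#!/bin/sh
# Sketch: background batch download with retries and a bandwidth cap.
# Long forms: -b = --background, -t = --tries, -c = --continue, -i = --input-file.
: "${WGET:=wget}"

overnight_download() {
  "$WGET" --background --output-file=download.log \
    --tries=10 --limit-rate=200k --continue --input-file="$1"
}

# Usage: overnight_download download.txt
#        tail -f download.log   # follow progress
```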

There’s a lot more to learn here. You can look into downloading PHP source, or setting up an automated downloader, if you want to get more advanced.
